Week 2

| Class | C3W2 |

|---|---|

| Created | |

| Materials | |

| Property | |

| Reviewed | |

| Type | Section |

Error Analysis

Carrying out error analysis.

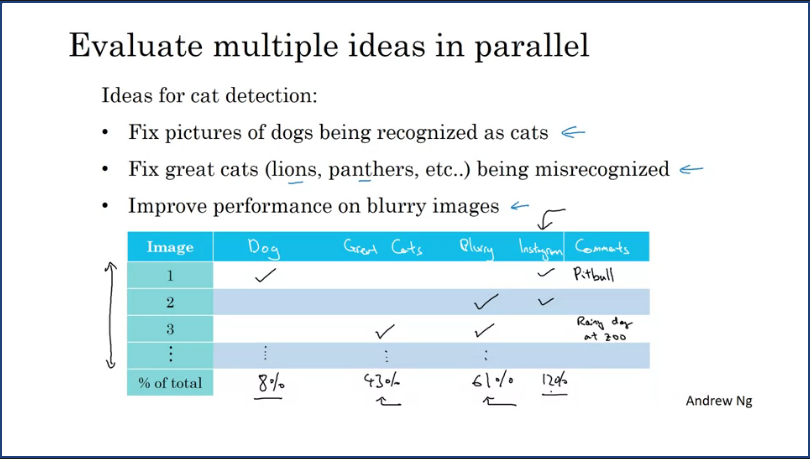

In order to carry out error analysis, you should find a set of mislabeled examples either in your dev set or training set. Look at the mislabeled examples for false positives and false negatives. Count the number of errors that fall into various categories and during this process, you might be inspired to generate new categories of errors. By cointing up the fraction of examples that are mislabeled in dofferent way often this will help you priorotise or give you inspiration to pursue a new direction. This quick error counting procedure can help you make better priorotization decisions and understand how promising different approaches are to work on.

Cleaning up incorrectly labeled data.

Data for a supervised learning problem comprises of input X and output labels Y. What if when going through the data you find that some of the output labels Y are incorrect meaning you have data which is incorrectly labeled, is it worth your while to gix up these labels?

- Consider the training set: DL algorithms are quite robust to random errors in the training set.

- However, they are less robust to systematic error: If your classifier incorrectly labels white dogs as cats, then the problem is because your classifier will learn to classify all white colored dogs as cats and this is one of the current issue with biased DL trained models.

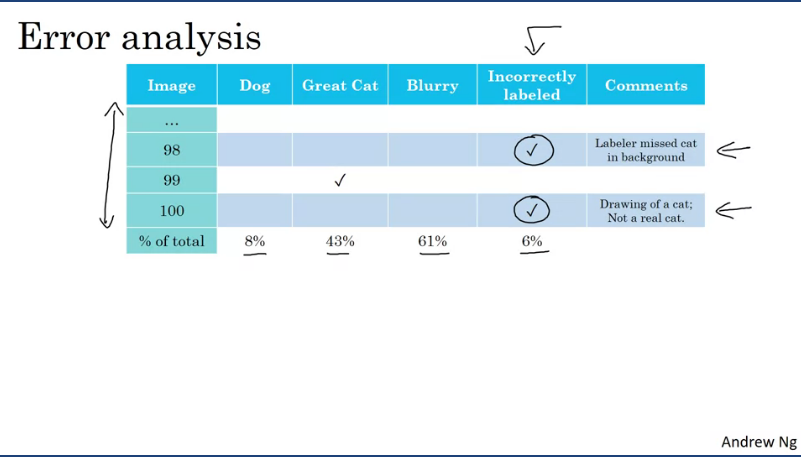

Recommendation: Create an error analysis table and add "incorrectly labeled" column.

Now looking at the image above, is it worthwhile spending more time trying to fix up the 6% of incorrectly labeled examples. Well, if it makes a significant difference to your ability to evaluate algorithms on your dev set, then consider spending more time to fix the incorect labels. One could start looking at:

- Overral dev set error

- Percentage of errors due to incorrect labels

- Errors due to other causes

Note: The goal of the dev set is to help you select between two classifiers A & B.

Guidelines for correcting incorrect dev/test set examples.

- Apply the same process to your dev and test sets to make sure they continue to come from the same distribution.

- Consider examining examples your algorithm got right as well as ones it got wrong.

- Train and dev/test data may now come from slightly different distributions. (It is super important for your dev/test set to come from the same distribution.)

Build your first system quickly, then iterate.

When working on a brand new machine learning application, one of the piece of advice often given is that, you should build your first system quickly and then iterate.

Key takeaways:

- Setup dev/test set and metric

- Build initial system quickly (Do not overthink or overcomplicate it)

- Use Bias/Variance analysis and Error analysis to priotitize next steps

Mismatched training and dev/test set

Training and testing on different distributions

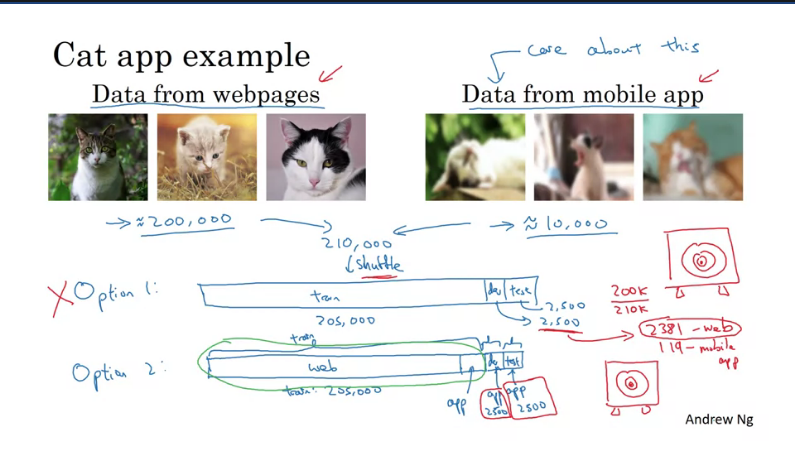

Suppose you are building a cat classifier mobile app,where users will upload amatuer images from the mobile app. In order to train your model you would need to source images both from the uploaded app and some from the internet (as you need more training data) which could be high resolution and proffesional.

Suppose you downloaded appx 200000 images from the internet and your users have only uploaded 10000 images(blurry and non prof) which your mobile app really cares about.

Now theres a dillema, as to you need your model to be trained on the same distribution of data but now you have data mismatch. Few guidelines with dealing with this issue:

- Add and train a subset of uploaded images together with the images from the internet and use the randomly shuffled uploaded images for dev/test.

- The advantage of splitting up your data into train, dev and test is that you're now aiming the target where you want it to be and the dev set contains data uloaded from the mobile app which is the distribution that you really care about. So in essense your mobile app should do well with minor error margings.

- The disadvantage is that your training distribution is different from your dev and test set distribution.

Key take away, is that It turns out that the above splitting will give better perfomance.

Bias and Variance with mismatched data distributions

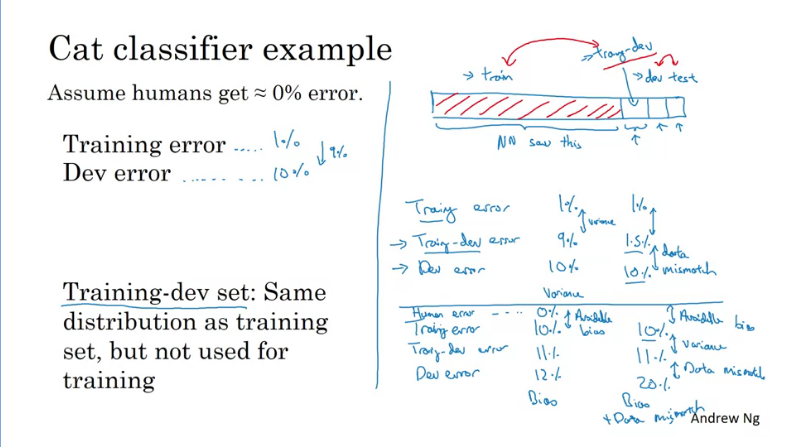

Estimating the bias and varience of your learning algorithm really helps you prioritize what you work on next but the way you analyse bias and varience changes when your training set comes from a different distribution than your dev and test sets.

Suppose we have our cat classifier and we get near perfect human error perfomance (All human's correctly identified the cats from the data), thus Bayes Optimal Error is nearly 0%.

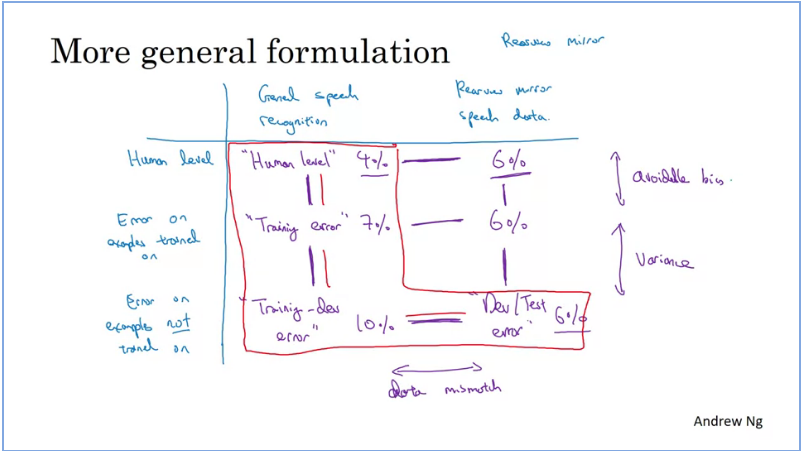

In order to do error analysis one would usually look at the training error as well as the dev set error. In the image above these errors are set to:

- Training error: 1%

- Dev error: 10%

if both training and dev set data came from the same distribution we would say that we have a large varience problem (The algorithm is not generalizing well from training set to dev set - perhaps it was trained on high res images and dev contained low and blurry images).

The problem with the analysis above is with the algorithm is that:

- Data in the dev set and training set come from different distributions

- The algorithm saw data in the training set but not in the dev set.

In order to clear the above effects above, we would need to create a new randomy selected set: Training-dev set, which is from the same distrubution as the training set but not used for training.

Then only train the algo on the training set and test on both training-dev set and dev set, thus minimizing the error rate.

Data mismatch occurs when your algorithm (as shown in the image above) error analysis between the training-dev set and dev set is high, due to the learning algorithm being trained only on the training set and it learned to do well on a different distribution than the distribution we really care about.

Examining the human level, training, training-dev and dev/test error can give you a better understanding as to how your algorithm is doing. And we have also seen that usin training data that comes from different distributuon as a dev and test set this could give tiy a lot more data and therefore help the perfomance of your learning algorithm. But instead of just having bias and varience potential problems you end up introducing data mismatch problem.

Addressing data mismatch

If your training set comes from a different distribution than your dev and test set and if error analysis shows that you have data mismatch problem, Guideline of things to try:

- Carry out manual error analysis to try to understand the difference between training and dev/test sets.

- Make training data more similar or collect more data similar to dev/test set (Using techniques such as Artificial data synthesis).

Learning from multiple tasks

Transfer learning

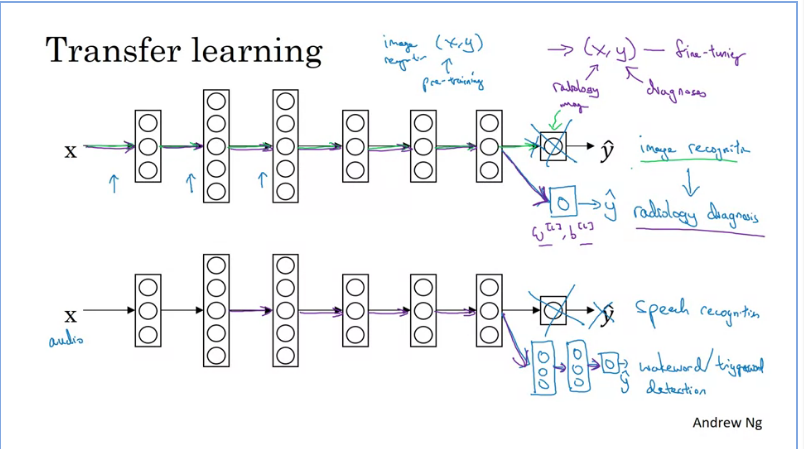

According to https://developers.google.com/machine-learning/glossary#transfer-learning, Transfer learning is the process of transferring information from one machine learning task to another.

For example, in multi-task learning, a single model solves multiple tasks, such as a deep model that has different output nodes for different tasks. Transfer learning might involve transferring knowledge from the solution of a simpler task to a more complex one, or involve transferring knowledge from a task where there is more data to one where there is less data.

Most machine learning systems solve a single task. Transfer learning is a baby step towards artificial intelligence in which a single program can solve multiple tasks.

When transfer learning makes sense?

- Task A and B have the same input X (images).

- You have a lot more data for Task A than Task B.

- Low level features from A could be helpful for learning B.

Transfer learning has been most useful if you're trying to do well on some Task B, usually a problem where you have relatively little data. So for example, in radiology, you know it's difficult to get that many x-ray scans to build a good radiology diagnosis system. So in that case, you might find a related but different task, such as image recognition, where you can get maybe a million images and learn a lot of load-over features from that, so that you can then try to do well on Task B on your radiology task despite not having that much data for it.

Multi-task learning

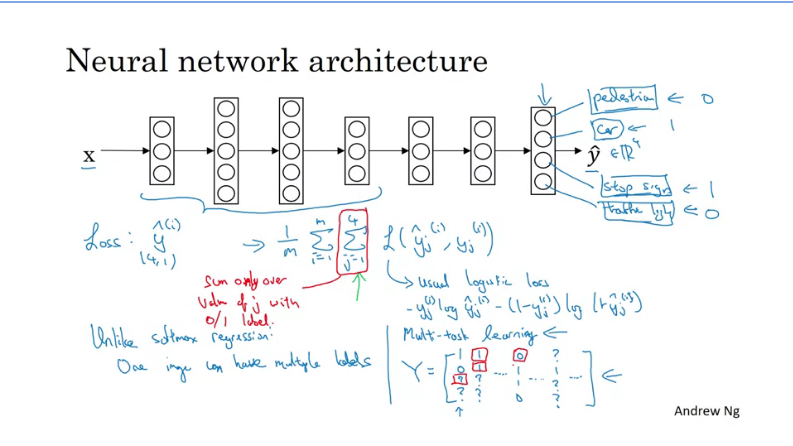

In multi-task learning, you start off simultaneously trying to have one neural network do several things at the same time and then each of these task helps all of the other tasks this improves better perfomance as compared to training individual nn in isolation.

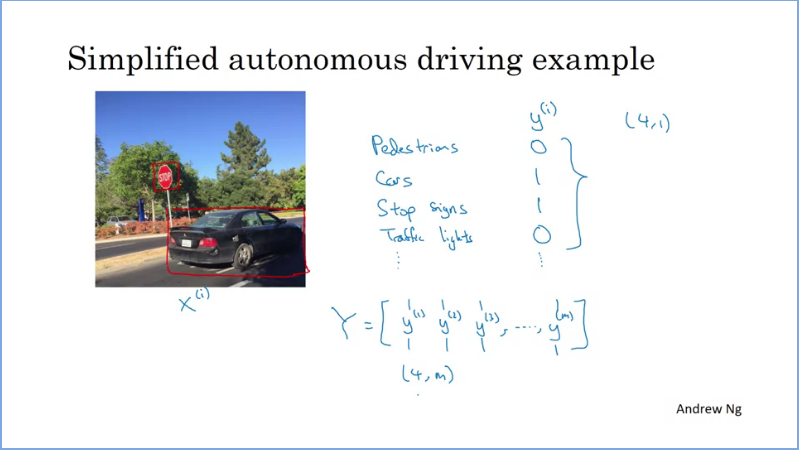

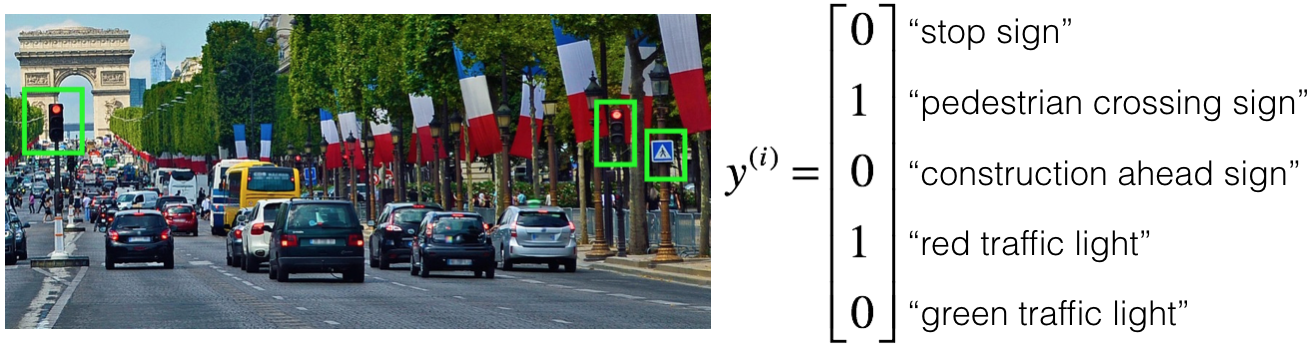

An example would be a simplified autonomous driving computer vision algorithm that detects pedestrians, cars, stop signs and traffic lights. You could train a nn that would output matrix with dimensions instead of the default

Unlike a softmax regression the nn architecture above can have multiple labels, so your nn architecture will have multiple probabilities i.e multiple classes/labels.

When does multitask learning make sense?

- Trainig on a set of tasks that could benefit from having shared lower-level features

- Usually: Amount of data you have for each task is quite similar.

- Can train a big enough neural network to do well on all tasks. (Alternatively, training individual nn for each task can lead to perfomance degradation)

End-to-end deep learning

What is end-to-end deep learning?

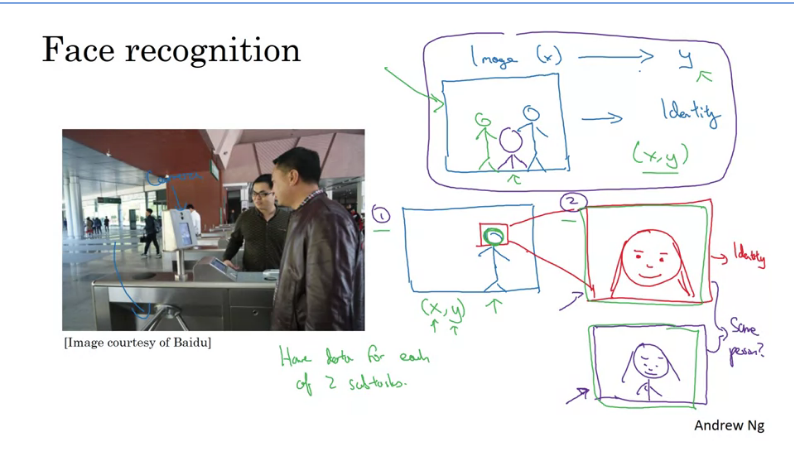

What end-to-end deep learning does is ot can take multiple stages and replace them usually with just a single nn.

- Multi-stage nn system

Let's take face recognition for example, breaking this application down into multistages increases better system perfomance were you detect a persons face then crop it and use it to match with faces in the database. This approach will not work efficiently on end-to-end deep learning unless there is large amount of data to compare to.

- End-to-end deep learning

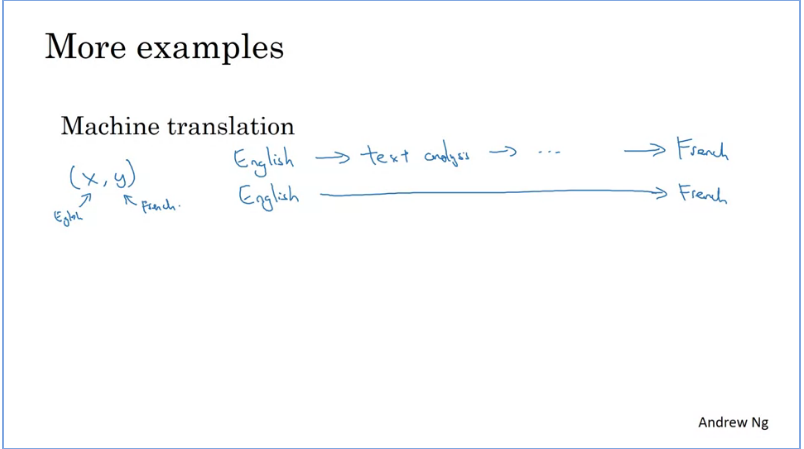

In this example, machine translation suppose translatiing from English to French works well with end-to-end mapping as compared to the native way of multistaging were it would go through feature extraction(data to number values) and so on.

The reason it works well, it's due to the large data sets of X-Y pairs where the English words correspond to French.

Whether to use end-to-end deep learning

Pros and cons of end-to-end deep learning

Pros:

- Let the data speak.

- Less hand-designing of components needed.

Cons:

- May need a large amount of data.

- Excludes potentially useful hand-designed components.

When do you apply end-to-end deep learning?

Key question: Do you have sufficient data to learn a function of the complexity needed to map x to y?

Q & A

Autonomous driving (case study)

- To help you practice strategies for machine learning, in this week we’ll present another scenario and ask how you would act. We think this “simulator” of working in a machine learning project will give a task of what leading a machine learning project could be like!

You are employed by a startup building self-driving cars. You are in charge of detecting road signs (stop sign, pedestrian crossing sign, construction ahead sign) and traffic signals (red and green lights) in images. The goal is to recognize which of these objects appear in each image. As an example, the above image contains a pedestrian crossing sign and red traffic lights

Your 100,000 labeled images are taken using the front-facing camera of your car. This is also the distribution of data you care most about doing well on. You think you might be able to get a much larger dataset off the internet, that could be helpful for training even if the distribution of internet data is not the same.

You are just getting started on this project. What is the first thing you do? Assume each of the steps below would take about an equal amount of time (a few days).

- Spend a few days training a basic model and see what mistakes it makes.

Justification: As discussed in lecture, applied ML is a highly iterative process. If you train a basic model and carry out error analysis (see what mistakes it makes) it will help point you in more promising directions.

- Spend a few days training a basic model and see what mistakes it makes.

- Your goal is to detect road signs (stop sign, pedestrian crossing sign, construction ahead sign) and traffic signals (red and green lights) in images. The goal is to recognize which of these objects appear in each image. You plan to use a deep neural network with ReLU units in the hidden layers.

For the output layer, a softmax activation would be a good choice for the output layer because this is a multi-task learning problem. True/False?

- False

Justification: Softmax would be a good choice if one and only one of the possibilities (stop sign, speed bump, pedestrian crossing, green light and red light) was present in each image.

- False

- You are carrying out error analysis and counting up what errors the algorithm makes. Which of these datasets do you think you should manually go through and carefully examine, one image at a time?

- 500 images on which the algorithm made a mistake

Justification: Focus on images that the algorithm got wrong. Also, 500 is enough to give you a good initial sense of the error statistics. There’s probably no need to look at 10,000, which will take a long time.

- 500 images on which the algorithm made a mistake

- After working on the data for several weeks, your team ends up with the following data:

- 100,000 labeled images taken using the front-facing camera of your car.

- 900,000 labeled images of roads downloaded from the internet.

- Each image’s labels precisely indicate the presence of any specific road signs and traffic signals or combinations of them. For example, means the image contains a stop sign and a red traffic light.

Because this is a multi-task learning problem, you need to have all your

vectors fully labeled. If one example is equal to then the learning algorithm will not be able to use that example. True/False?

- False Justification: As seen in the lecture on multi-task learning, you can compute the cost such that it is not influenced by the fact that some entries haven’t been labeled.

- The distribution of data you care about contains images from your car’s front-facing camera; which comes from a different distribution than the images you were able to find and download off the internet. How should you split the dataset into train/dev/test sets?

- Choose the training set to be the 900,000 images from the internet along with 80,000 images from your car’s front-facing camera. The 20,000 remaining images will be split equally in dev and test sets.

Justification: Yes. As seen in lecture, it is important that your dev and test set have the closest possible distribution to “real”-data. It is also important for the training set to contain enough “real”-data to avoid having a data-mismatch problem.

- Choose the training set to be the 900,000 images from the internet along with 80,000 images from your car’s front-facing camera. The 20,000 remaining images will be split equally in dev and test sets.

- Assume you’ve finally chosen the following split between of the data:

Dataset Contains Error of the algorithm Training 940000 images randomly picked from (900000 internet images + 60000 car’s front-facing camera images) 8.8 Training-Dev 20,000 images randomly picked from (900,000 internet images + 60,000 car’s front-facing camera images) 9.1 Dev 20,000 images from your car’s front-facing camera 14.3 Test 20,000 images from the car’s front-facing camera 14.8 You also know that human-level error on the road sign and traffic signals classification task is around 0.5%. Which of the following are True? (Check all that apply).

- You have a large data-mismatch problem because your model does a lot better on the training-dev set than on the dev set

- You have a large avoidable-bias problem because your training error is quite a bit higher than the human-level error.

- Based on table from the previous question, a friend thinks that the training data distribution is much easier than the dev/test distribution. What do you think?

- There’s insufficient information to tell if your friend is right or wrong.

Justification: The algorithm does better on the distribution of data it trained on. But you don’t know if it’s because it trained on that no distribution or if it really is easier. To get a better sense, measure human-level error separately on both distributions.

- There’s insufficient information to tell if your friend is right or wrong.

- You decide to focus on the dev set and check by hand what are the errors due to. Here is a table summarizing your discoveries:

In this table, 4.1%, 8.0%, etc. are a fraction of the total dev set (not just examples your algorithm mislabeled). For example, about 8.0/15.3 = 52% of your errors are due to foggy pictures.

The results from this analysis implies that the team’s highest priority should be to bring more foggy pictures into the training set so as to address the 8.0% of errors in that category. True/False?

Additional Note: there are subtle concepts to consider with this question, and you may find arguments for why some answers are also correct or incorrect. We recommend that you spend time reading the feedback for this quiz, to understand what issues that you will want to consider when you are building your own machine learning project.

- False because it depends on how easy it is to add foggy data. If foggy data is very hard and costly to collect, it might not be worth the team’s effort. Justification: correct: feedback: This is the correct answer. You should consider the tradeoff between the data accessibility and potential improvement of your model trained on this additional data.

- You can buy a specially designed windshield wiper that help wipe off some of the raindrops on the front-facing camera. Based on the table from the previous question, which of the following statements do you agree with?

- 2.2% would be a reasonable estimate of the maximum amount this windshield wiper could improve performance.

Justification: Yes. You will probably not improve performance by more than 2.2% by solving the raindrops problem. If your dataset was infinitely big, 2.2% would be a perfect estimate of the improvement you can achieve by purchasing a specially designed windshield wiper that removes the raindrops.

- 2.2% would be a reasonable estimate of the maximum amount this windshield wiper could improve performance.

- You decide to use data augmentation to address foggy images. You find 1,000 pictures of fog off the internet, and “add” them to clean images to synthesize foggy days, like this:

Which of the following statements do you agree with?

- So long as the synthesized fog looks realistic to the human eye, you can be confident that the synthesized data is accurately capturing the distribution of real foggy images (or a subset of it), since human vision is very accurate for the problem you’re solving.

Justification: Yes. If the synthesized images look realistic, then the model will just see them as if you had added useful data to identify road signs and traffic signals in a foggy weather. I will very likely help.

- So long as the synthesized fog looks realistic to the human eye, you can be confident that the synthesized data is accurately capturing the distribution of real foggy images (or a subset of it), since human vision is very accurate for the problem you’re solving.

- After working further on the problem, you’ve decided to correct the incorrectly labeled data on the dev set. Which of these statements do you agree with? (Check all that apply).

- You should also correct the incorrectly labeled data in the test set, so that the dev and test sets continue to come from the same distribution

Justification: Yes because you want to make sure that your dev and test data come from the same distribution for your algorithm to make your team’s iterative development process is efficient.

- You do not necessarily need to fix the incorrectly labeled data in the training set, because it's okay for the training set distribution to differ from the dev and test sets. Note that it is important that the dev set and test set have the same distribution.

Justification: True, deep learning algorithms are quite robust to having slightly different train and dev distributions.

- You should also correct the incorrectly labeled data in the test set, so that the dev and test sets continue to come from the same distribution

- So far your algorithm only recognizes red and green traffic lights. One of your colleagues in the startup is starting to work on recognizing a yellow traffic light. (Some countries call it an orange light rather than a yellow light; we’ll use the US convention of calling it yellow.) Images containing yellow lights are quite rare, and she doesn’t have enough data to build a good model. She hopes you can help her out using transfer learning.

What do you tell your colleague?

- She should try using weights pre-trained on your dataset, and fine-tuning further with the yellow-light dataset.

Justification: Yes. You have trained your model on a huge dataset, and she has a small dataset. Although your labels are different, the parameters of your model have been trained to recognize many characteristics of road and traffic images which will be useful for her problem. This is a perfect case for transfer learning, she can start with a model with the same architecture as yours, change what is after the last hidden layer and initialize it with your trained parameters.

- She should try using weights pre-trained on your dataset, and fine-tuning further with the yellow-light dataset.

- Another colleague wants to use microphones placed outside the car to better hear if there’re other vehicles around you. For example, if there is a police vehicle behind you, you would be able to hear their siren. However, they don’t have much to train this audio system. How can you help?

- Neither transfer learning nor multi-task learning seems promising.

Justification: Yes. The problem he is trying to solve is quite different from yours. The different dataset structures make it probably impossible to use transfer learning or multi-task learning.

- Neither transfer learning nor multi-task learning seems promising.

- To recognize red and green lights, you have been using this approach:

(A) Input an image (x) to a neural network and have it directly learn a mapping to make a prediction as to whether there’s a red light and/or green light (y). A teammate proposes a different, two-step approach:

(B) In this two-step approach, you would first (i) detect the traffic light in the image (if any), then (ii) determine the color of the illuminated lamp in the traffic light. Between these two, Approach B is more of an end-to-end approach because it has distinct steps for the input end and the output end. True/False?

- False

Justification: Yes. (A) is an end-to-end approach as it maps directly the input (x) to the output (y).

- False

- Approach A (in the question above) tends to be more promising than approach B if you have a ________ (fill in the blank).

- Large training set

Justification: Yes. In many fields, it has been observed that end-to-end learning works better in practice, but requires a large amount of data.

- Large training set