Week 3

| Class | C2W3 |
|---|---|
| Materials | https://www.coursera.org/learn/deep-neural-network/lecture/dknSn/tuning-process |
| Type | Section |

Hyperparameter tuning

Tuning process

Guidelines on tuning or selecting the best hyperparameters for your model.

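Hyperparameters, roughly from most to least important:

- Learning rate $\alpha$

- Momentum term $\beta$ (e.g. 0.9)

- Number of hidden units

- Mini-batch size

- Number of layers

- Learning rate decay

- Adam optimizer hyperparameters ($\beta_1$, $\beta_2$, $\varepsilon$), which are almost never tuned; the usual defaults are $\beta_1 = 0.9$, $\beta_2 = 0.999$, $\varepsilon = 10^{-8}$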

Key takeaways when selecting hyperparameters:

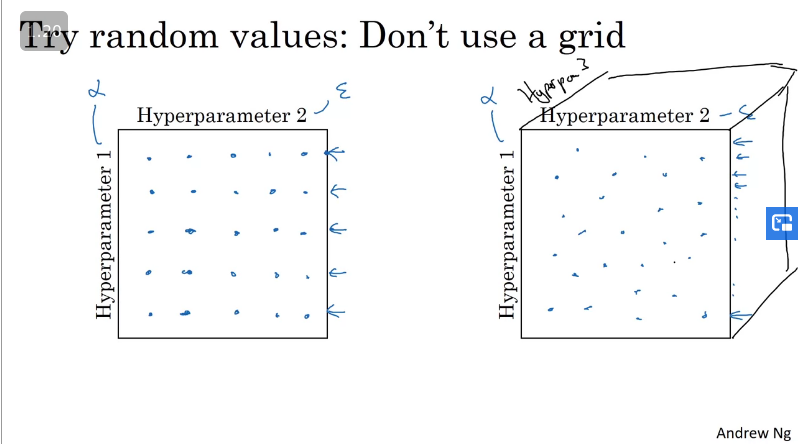

- Randomly sample values for your hyperparameters, instead of grid search.

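- Consider a coarse-to-fine search process: after randomly sampling over the whole region, zoom in on the region where the best values were found and sample more densely there.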
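As a minimal sketch of random search followed by a coarse-to-fine pass, the Python below replaces actual model training with a made-up `toy_score` function. That function, the two hyperparameters searched (the learning-rate exponent and the number of hidden units), the ranges, and the size of the zoom-in box are all hypothetical choices for illustration.

```python
import random

def toy_score(lr_exp, hidden_units):
    # Hypothetical stand-in for "train a model, return its dev-set score" (higher is better).
    # It peaks at lr_exp = -2.5 (alpha ~ 0.003) and 60 hidden units.
    return -((lr_exp + 2.5) ** 2) - ((hidden_units - 60) / 50) ** 2

def random_search(lr_box, hu_box, n_trials, rng):
    """Try n_trials random (lr_exp, hidden_units) points; return (score, lr_exp, hu) tuples, best first."""
    trials = []
    for _ in range(n_trials):
        lr_exp = rng.uniform(*lr_box)   # learning rate alpha = 10 ** lr_exp
        hu = rng.randint(*hu_box)       # hidden units, both bounds inclusive
        trials.append((toy_score(lr_exp, hu), lr_exp, hu))
    return sorted(trials, reverse=True)

def coarse_to_fine(rng, n_trials=25):
    # Coarse pass: random points over the whole search region.
    coarse = random_search((-4.0, 0.0), (10, 200), n_trials, rng)
    _, best_lr, best_hu = coarse[0]
    # Fine pass: random points in a smaller box around the best coarse point.
    fine_lr_box = (max(-4.0, best_lr - 0.5), min(0.0, best_lr + 0.5))
    fine_hu_box = (max(10, best_hu - 20), min(200, best_hu + 20))
    fine = random_search(fine_lr_box, fine_hu_box, n_trials, rng)
    return coarse[0], max(coarse[0], fine[0])

coarse_best, overall_best = coarse_to_fine(random.Random(0))
print("best after coarse pass:", coarse_best)
print("best after fine pass:  ", overall_best)
```

Random points, unlike a grid, try a different value of every hyperparameter on each trial, so the important hyperparameter (here the learning rate) gets explored at 25 distinct values per pass instead of just 5.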

Using an appropriate scale to pick hyperparameters

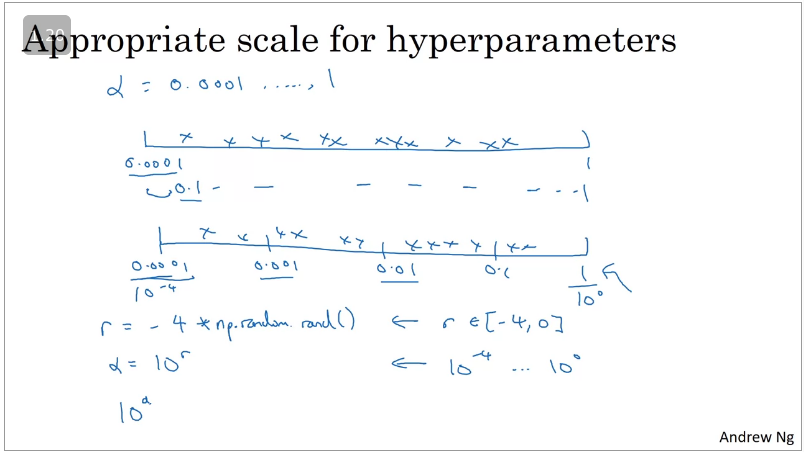

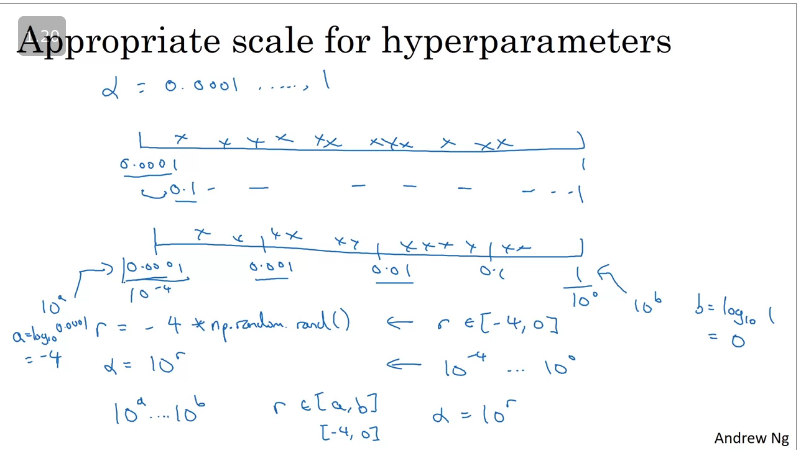

When randomly sampling hyperparameter values, sampling uniformly over a linear range is inefficient. Suppose we need to find a learning rate $\alpha$ between 0.0001 and 1: as the first number line above illustrates, you would end up spending about 90% of the resources on values between 0.1 and 1 and only 10% on values between 0.0001 and 0.1.

Instead of a linear scale, use a logarithmic scale: sample $r$ uniformly in $[-4, 0]$ and set $\alpha = 10^r$. The four intervals 0.0001 to 0.001, 0.001 to 0.01, 0.01 to 0.1, and 0.1 to 1 then each receive an equal share of the samples, as illustrated on the second number line.
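A short sketch comparing the two scales on the 0.0001 to 1 learning-rate range above. It uses Python's standard `random` module; the helper names `sample_learning_rate` and `count_per_decade` are hypothetical.

```python
import random

def sample_learning_rate(rng, low_exp=-4.0, high_exp=0.0):
    """Log-scale sampling: r ~ Uniform[low_exp, high_exp], then alpha = 10 ** r."""
    r = rng.uniform(low_exp, high_exp)
    return 10 ** r

def count_per_decade(samples):
    """Bucket samples into the decades [1e-4, 1e-3), [1e-3, 1e-2), [1e-2, 1e-1), [1e-1, 1]."""
    counts = [0, 0, 0, 0]
    for s in samples:
        counts[sum(s >= edge for edge in (1e-3, 1e-2, 1e-1))] += 1
    return counts

rng = random.Random(42)
log_samples = [sample_learning_rate(rng) for _ in range(10_000)]
linear_samples = [rng.uniform(1e-4, 1.0) for _ in range(10_000)]

print("log scale:   ", count_per_decade(log_samples))     # roughly 2500 in every decade
print("linear scale:", count_per_decade(linear_samples))  # roughly 90% in the last decade
```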

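Another tricky case is sampling the hyperparameter $\beta$ for exponentially weighted averages, for example somewhere between 0.9 and 0.999.

Why we shouldn't sample $\beta$ on a linear scale:

- When $\beta$ is close to 1, the results become very sensitive to even small changes in $\beta$. An exponentially weighted average roughly averages over the last $\frac{1}{1-\beta}$ values, so going from 0.999 to 0.9995 changes that window from about 1000 to about 2000 values (a huge impact on the algorithm), while going from 0.9000 to 0.9005 keeps it at about 10.

- Sampling $1 - \beta$ on a log scale instead ($r \in [-3, -1]$, $1 - \beta = 10^r$) samples more densely where $\beta$ is close to 1, so the search budget is spent more efficiently.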
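A minimal sketch of log-scale sampling for $\beta$ in the 0.9 to 0.999 range, together with the $\frac{1}{1-\beta}$ averaging-window approximation used above. The function names are hypothetical; only the standard library is used.

```python
import random

def sample_beta(rng, low_exp=-3.0, high_exp=-1.0):
    """Sample beta in [0.9, 0.999] by putting 1 - beta on a log scale."""
    r = rng.uniform(low_exp, high_exp)   # r in [-3, -1]
    return 1 - 10 ** r                   # 1 - beta in [0.001, 0.1]

def window(beta):
    """Approximate number of past values an exponentially weighted average covers."""
    return 1 / (1 - beta)

rng = random.Random(0)
betas = [sample_beta(rng) for _ in range(1000)]
for b in betas[:5]:
    print(f"beta={b:.5f}  window~{window(b):.0f}")

# The same step of 0.0005 has a very different effect depending on where beta is:
print(window(0.9005) - window(0.9))    # about 0.05
print(window(0.9995) - window(0.999))  # about 1000
```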

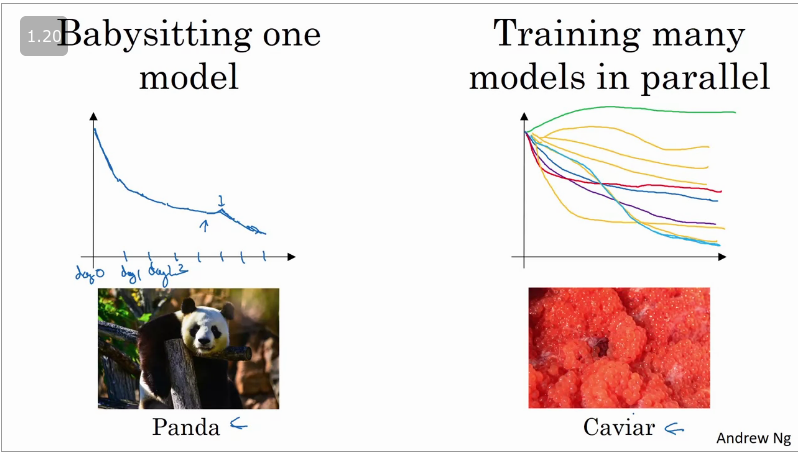

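Hyperparameter tuning in practice: Pandas vs. Caviar

There are two major ways in which people search for hyperparameters and train models:

- Babysitting one model ("Panda" strategy): used when there isn't enough computational capacity to train many models at the same time, so you watch a single model train and adjust its hyperparameters as it goes.

- Training many models in parallel ("Caviar" strategy): used when there are enough computational resources to train multiple models with different hyperparameter settings in parallel and pick the best one.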

Batch Normalization

Normalizing activations in a network

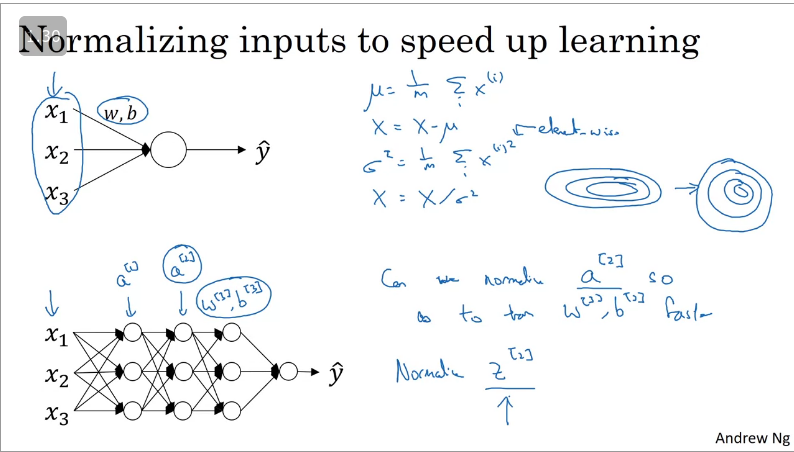

Batch normalization makes the hyperparameter search problem much easier, makes the network much more robust to the choice of hyperparameters, and also enables you to train much deeper neural networks.

When training a model such as logistic regression, normalizing the input features can speed up learning of $w$ and $b$: compute the mean $\mu = \frac{1}{m}\sum_{i} x^{(i)}$, subtract it from the training set ($X := X - \mu$), compute the variance $\sigma^2 = \frac{1}{m}\sum_{i} (x^{(i)})^2$ (an element-wise squaring, taken after the mean subtraction), and normalize the data set by the standard deviation ($X := X / \sigma$).

This works well for linear models, but what about deep neural networks? In a deeper model you have not only the input features $x$ but also the activations $a^{[1]}, a^{[2]}, \dots$ of each layer. So if you want to train the parameters of layer 3, $W^{[3]}$ and $b^{[3]}$, more efficiently, you can normalize the mean and variance of $a^{[2]}$, the input to layer 3; this is the idea behind batch norm. In practice, however, normalizing $z^{[2]}$ (the value before the activation function) rather than $a^{[2]}$ is the default choice.

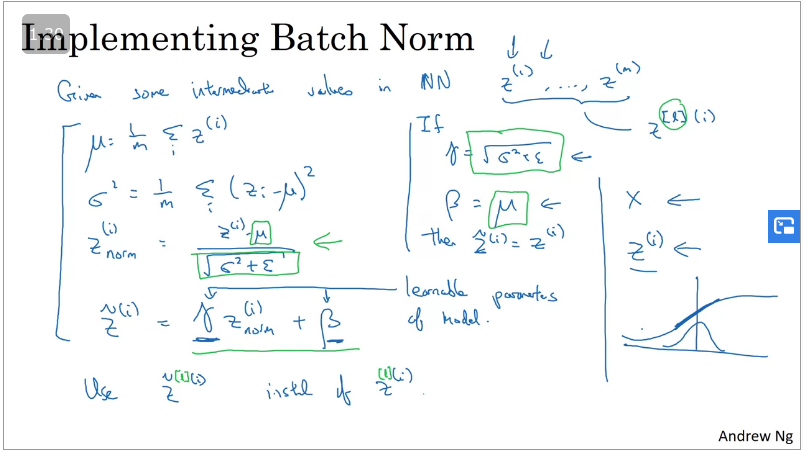

Implementation of batch normalization

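Given the intermediate values $z^{(1)}, \dots, z^{(m)}$ of a hidden layer $l$ (the layer index $[l]$ is dropped for brevity):

- Compute the mean: $\mu = \frac{1}{m}\sum_{i} z^{(i)}$

- Compute the variance: $\sigma^2 = \frac{1}{m}\sum_{i} (z^{(i)} - \mu)^2$

- Compute $z_{\text{norm}}^{(i)} = \frac{z^{(i)} - \mu}{\sqrt{\sigma^2 + \varepsilon}}$, which has mean 0 and (approximately) variance 1; $\varepsilon$ avoids division by zero

- Compute $\tilde{z}^{(i)} = \gamma\, z_{\text{norm}}^{(i)} + \beta$, where $\gamma$ and $\beta$ are learnable parameters of the model

Note that $\gamma$ and $\beta$ allow you to set the mean and variance of $\tilde{z}$ to whatever you want them to be.

If $\gamma = \sqrt{\sigma^2 + \varepsilon}$ and $\beta = \mu$, then $\tilde{z}^{(i)} = z^{(i)}$, i.e. batch norm can learn to undo the normalization entirely. With $\gamma = 1$ and $\beta = 0$ you get $\tilde{z}^{(i)} = z_{\text{norm}}^{(i)}$. Learning $\gamma$ and $\beta$ lets the hidden units take a different mean and variance instead of always mean 0 and variance 1, e.g. a larger variance so that a sigmoid activation is not stuck in its nearly linear region.

So in later computations you use $\tilde{z}^{[l](i)}$ instead of $z^{[l](i)}$.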
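The four steps above can be sketched for one layer as follows. This is a plain-Python illustration, not a framework implementation: `Z` is assumed to be a list of rows (one row per hidden unit, one entry per mini-batch example), and the function name and numbers are hypothetical. It also checks the two special cases discussed above.

```python
import math

def batch_norm_forward(Z, gamma, beta, eps=1e-8):
    """Batch norm forward pass for one layer.

    Z: list of rows, one per hidden unit; each row holds that unit's z values
       for the m examples of the mini-batch.
    gamma, beta: one learnable scale / shift per hidden unit.
    Returns Z_tilde with the same shape as Z.
    """
    Z_tilde = []
    for z_row, g, b in zip(Z, gamma, beta):
        m = len(z_row)
        mu = sum(z_row) / m                                        # mean
        var = sum((z - mu) ** 2 for z in z_row) / m                # variance
        z_norm = [(z - mu) / math.sqrt(var + eps) for z in z_row]  # mean 0, variance ~1
        Z_tilde.append([g * zn + b for zn in z_norm])              # scale and shift
    return Z_tilde

# Two hidden units, mini-batch of 4 examples (made-up numbers).
Z = [[1.0, 2.0, 3.0, 4.0],
     [10.0, 0.0, -10.0, 4.0]]

# gamma = 1, beta = 0: each unit ends up with mean 0 and variance ~1.
out = batch_norm_forward(Z, gamma=[1.0, 1.0], beta=[0.0, 0.0])
print(out)

# gamma = sqrt(var + eps), beta = mu: batch norm undoes itself and returns Z.
eps = 1e-8
mus = [sum(r) / len(r) for r in Z]
variances = [sum((z - mu) ** 2 for z in r) / len(r) for r, mu in zip(Z, mus)]
gammas = [math.sqrt(v + eps) for v in variances]
ident = batch_norm_forward(Z, gamma=gammas, beta=mus, eps=eps)
print(ident)
```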

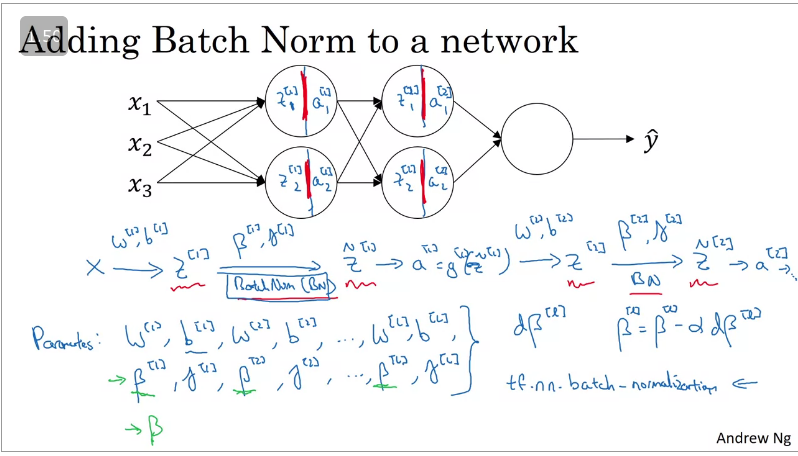

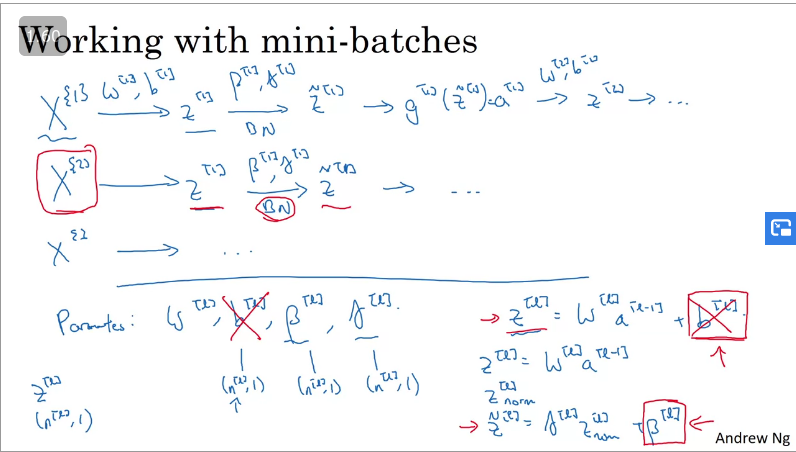

Fitting Batch Norm into a neural network

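In practice you won't need to implement batch norm from the formulas above yourself. Deep learning frameworks such as TensorFlow provide it in a single line, e.g. `tf.nn.batch_normalization`.

In practice, batch norm is applied one mini-batch at a time: $\mu$ and $\sigma^2$ are computed only on the current mini-batch $X^{\{t\}}$.

Note: when using batch norm you don't need the bias $b^{[l]}$, because any constant added to $z^{[l]}$ is cancelled out by the mean subtraction step. So when using batch norm, $b^{[l]}$ can be set to 0 (or removed).

The role of $b^{[l]}$ is taken over by $\beta^{[l]}$, so $z^{[l]} = W^{[l]} a^{[l-1]}$ and $\tilde{z}^{[l]} = \gamma^{[l]} z_{\text{norm}}^{[l]} + \beta^{[l]}$. The shapes of $\beta^{[l]}$ and $\gamma^{[l]}$ are both $(n^{[l]}, 1)$.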
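A quick numeric check of why the bias is redundant: normalizing $W a + b$ gives the same result as normalizing $W a$ for any constant $b$. The values of `wa` and `b` below are made up, and `normalize` is a hypothetical helper that performs only the mean/variance step.

```python
import math

def normalize(z_row, eps=1e-8):
    """Mean-subtract and variance-normalize one hidden unit over a mini-batch."""
    m = len(z_row)
    mu = sum(z_row) / m
    var = sum((z - mu) ** 2 for z in z_row) / m
    return [(z - mu) / math.sqrt(var + eps) for z in z_row]

# Hypothetical values of W a for one unit over a mini-batch of 5 examples.
wa = [0.3, -1.2, 2.5, 0.0, 0.9]
b = 7.0  # any bias value

with_bias = normalize([v + b for v in wa])
without_bias = normalize(wa)
print(max(abs(x - y) for x, y in zip(with_bias, without_bias)))  # ~0, floating-point noise only
```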

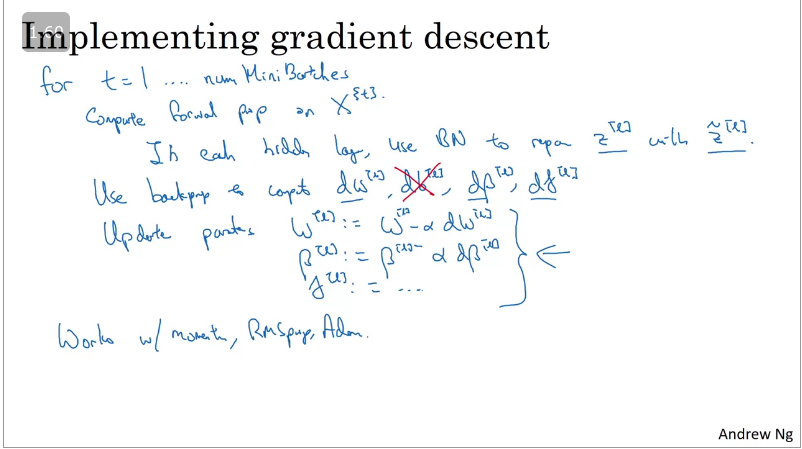

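Implementing gradient descent with mini-batches and batch norm:

- For $t = 1, \dots, T$ (the number of mini-batches):

  - Compute forward propagation on $X^{\{t\}}$, replacing $z^{[l]}$ with $\tilde{z}^{[l]}$ in each hidden layer.

  - Use backpropagation to compute $dW^{[l]}$, $d\beta^{[l]}$, $d\gamma^{[l]}$ (there is no $db^{[l]}$).

  - Update the parameters: $W^{[l]} := W^{[l]} - \alpha\, dW^{[l]}$, $\beta^{[l]} := \beta^{[l]} - \alpha\, d\beta^{[l]}$, $\gamma^{[l]} := \gamma^{[l]} - \alpha\, d\gamma^{[l]}$.

- This also works with gradient descent with momentum, RMSprop, and Adam.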
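The update step above, sketched with gradient descent with momentum: batch norm's $\gamma$ and $\beta$ are simply treated as extra parameters next to $W$. The gradients are made-up placeholder numbers (backpropagation through batch norm is not shown), and the dictionary keys and function name are hypothetical. The momentum hyperparameter is named `momentum` here to avoid confusing it with batch norm's $\beta$, which is an unrelated quantity.

```python
def momentum_step(params, grads, velocity, lr=0.01, momentum=0.9):
    """One gradient-descent-with-momentum update applied uniformly to every parameter.

    params, grads, velocity: dicts mapping a name such as "W[1]", "gamma[1]", "beta[1]"
    to a list of floats. Batch norm's gamma and beta are updated exactly like W.
    """
    for name, p in params.items():
        v, g = velocity[name], grads[name]
        for i in range(len(p)):
            v[i] = momentum * v[i] + (1 - momentum) * g[i]  # exponentially weighted gradient
            p[i] -= lr * v[i]

# Hypothetical parameters and gradients for one layer (no b[1]: batch norm makes it redundant).
params = {"W[1]": [0.5, -0.3], "gamma[1]": [1.0], "beta[1]": [0.0]}
grads = {"W[1]": [0.2, -0.1], "gamma[1]": [0.4], "beta[1]": [-0.6]}
velocity = {k: [0.0] * len(v) for k, v in params.items()}

momentum_step(params, grads, velocity, lr=0.1)
print(params)
```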

Why does Batch Norm work?

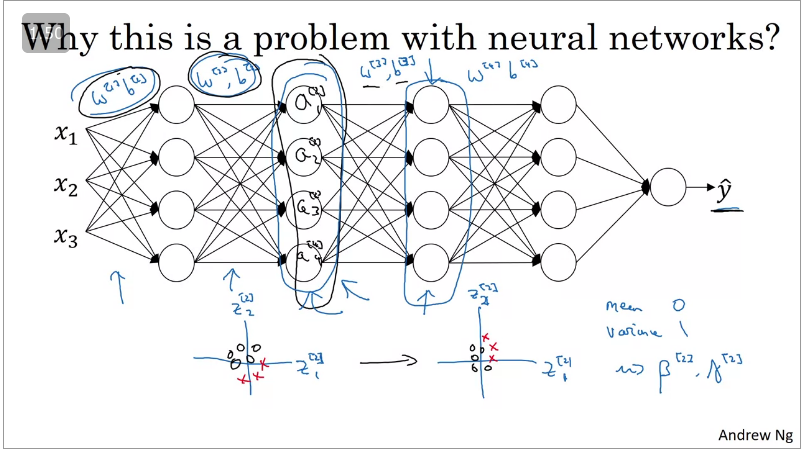

Batch norm works because it makes weights later or deeper in a neural network more robust to changes to weights in earlier layers. For example, the weights in layer 10 become more robust to changes in the weights of layer 1.

Covariate shift occurs when the distribution of the input variables changes, for example between the training and test sets; a model trained on the old distribution may then need to be retrained.

Batch norm reduces the amount that the distribution of hidden unit values shifts around.

From the image above: the values of $z^{[2]}_1$ and $z^{[2]}_2$ can and will change when the network updates the parameters in the earlier layers, but batch norm ensures that their mean and variance stay the same (at whatever values $\beta^{[2]}$ and $\gamma^{[2]}$ set). This limits the amount to which updating the parameters in the earlier layers can affect the distribution of values that the third layer sees and has to learn on.

Batch norm therefore reduces the problem of the input values to each layer changing: those values become more stable even as the earlier layers keep updating, which allows each layer to learn a bit more independently of the others.
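A small numeric illustration under a simplifying assumption: the effect of updating the earlier layers is modeled as a plain scale-and-shift of one unit's values (real updates can change the distribution in more complex ways). The raw values move a lot, but after normalization they have the same mean and variance, and in this affine case are essentially identical. The numbers and helper names are hypothetical.

```python
import math

def batch_norm(z_row, gamma=1.0, beta=0.0, eps=1e-8):
    """Normalize one unit over a mini-batch, then scale by gamma and shift by beta."""
    m = len(z_row)
    mu = sum(z_row) / m
    var = sum((z - mu) ** 2 for z in z_row) / m
    return [gamma * (z - mu) / math.sqrt(var + eps) + beta for z in z_row]

def mean_var(xs):
    mu = sum(xs) / len(xs)
    return mu, sum((x - mu) ** 2 for x in xs) / len(xs)

# Values one unit of layer 2 produces on a mini-batch before an update (made up)...
before = [0.2, 1.5, -0.7, 0.9, 0.1]
# ...and after the earlier layers were updated, modeled here as a scale and shift.
after = [3.0 * z + 5.0 for z in before]

print(mean_var(before), mean_var(after))                          # raw distribution moved
print(mean_var(batch_norm(before)), mean_var(batch_norm(after)))  # both ~(0, 1)
```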

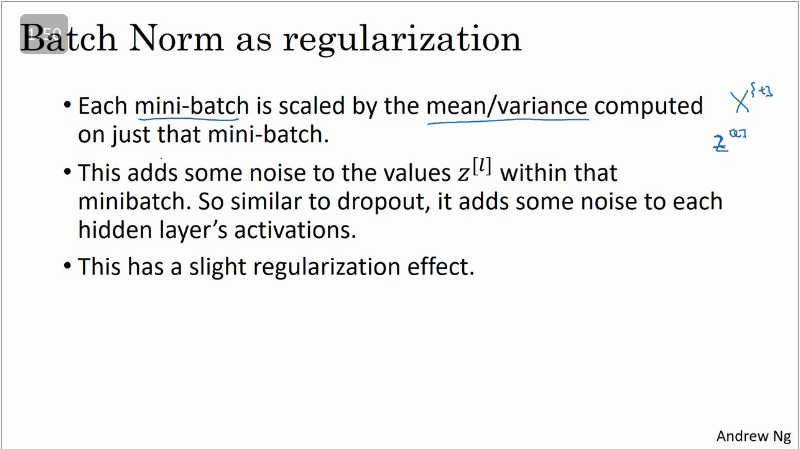

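It turns out that batch normalization also has a slight regularization effect: because $\mu$ and $\sigma^2$ are computed on each mini-batch rather than on the whole data set, they add some noise to the values $z^{[l]}$, similar to dropout. The effect is small, and it shrinks as the mini-batch size grows.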
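The source of that noise, in a minimal sketch: the same example's $z$ value is normalized differently depending on which mini-batch it lands in, because $\mu$ and $\sigma^2$ come from the mini-batch. Both mini-batches and the helper name are made up for illustration.

```python
import math

def normalize(z_row, eps=1e-8):
    """Mean-subtract and variance-normalize one hidden unit over a mini-batch."""
    m = len(z_row)
    mu = sum(z_row) / m
    var = sum((z - mu) ** 2 for z in z_row) / m
    return [(z - mu) / math.sqrt(var + eps) for z in z_row]

x = 1.0                        # the same example's z value for one hidden unit
batch_a = [x, 0.5, 2.0, -1.0]  # hypothetical mini-batch containing x (mean 0.625)
batch_b = [x, 3.0, 4.0, 2.5]   # another hypothetical mini-batch containing x (mean 2.625)

print(normalize(batch_a)[0], normalize(batch_b)[0])  # same input, different outputs
```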

Useful resource: http://mlexplained.com/2018/01/10/an-intuitive-explanation-of-why-batch-normalization-really-works-normalization-in-deep-learning-part-1/

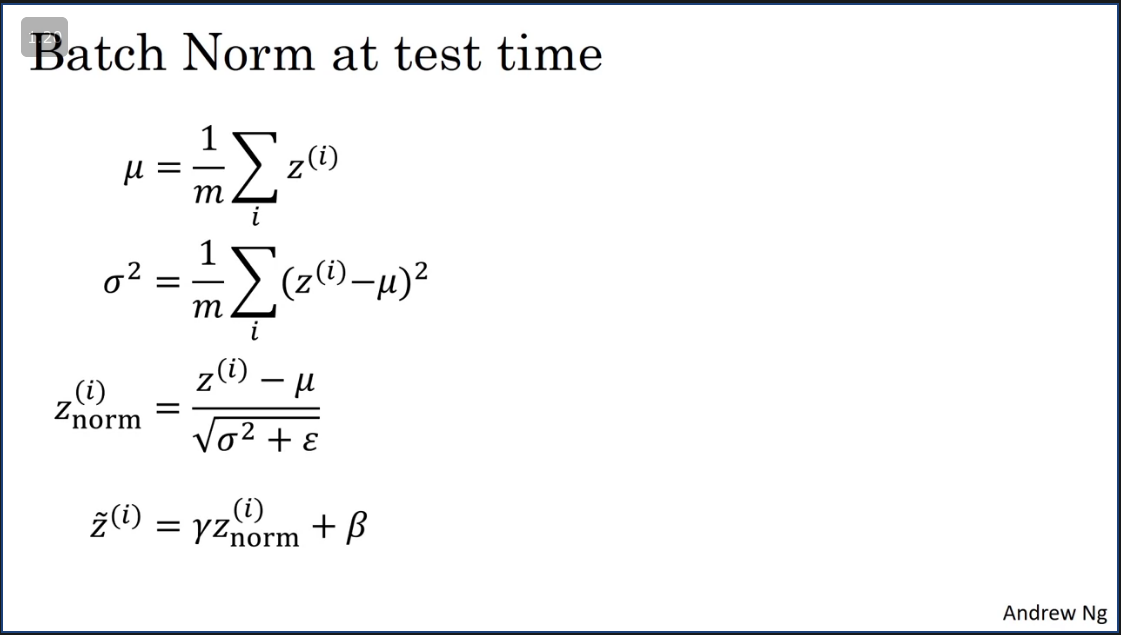

Batch Norm at test time

Key takeaway:

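- During training, $\mu$ and $\sigma^2$ are computed on an entire mini-batch, but at test time you might need to process a single example, on which a meaningful mean and variance cannot be computed.

So you need a separate estimate of $\mu$ and $\sigma^2$ from your training set, and there are many ways to get one. In theory you could run your whole training set through your final network to get $\mu$ and $\sigma^2$. In practice, the usual approach is to keep an exponentially weighted average of the $\mu$ and $\sigma^2$ values computed on the mini-batches during training and use those at test time.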
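A minimal sketch of the exponentially weighted average approach for one hidden unit. The averaging weight of 0.9, the starting values of 0, and the training mini-batches are assumptions for illustration; frameworks implement the same idea with their own defaults and naming.

```python
import math

def batch_stats(z_row):
    m = len(z_row)
    mu = sum(z_row) / m
    return mu, sum((z - mu) ** 2 for z in z_row) / m

def running_stats(mini_batches, momentum=0.9):
    """Exponentially weighted averages of the per-mini-batch mean and variance."""
    run_mu, run_var = 0.0, 0.0
    for z_row in mini_batches:
        mu, var = batch_stats(z_row)
        run_mu = momentum * run_mu + (1 - momentum) * mu
        run_var = momentum * run_var + (1 - momentum) * var
    return run_mu, run_var

def batch_norm_test(z, run_mu, run_var, gamma=1.0, beta=0.0, eps=1e-8):
    """Normalize a single test example using the running estimates."""
    return gamma * (z - run_mu) / math.sqrt(run_var + eps) + beta

# Hypothetical training mini-batches for one unit (each has mean 2.0 and variance 1.0).
mini_batches = [[1.0, 3.0, 1.0, 3.0], [3.0, 1.0, 3.0, 1.0]] * 100
run_mu, run_var = running_stats(mini_batches)
print(run_mu, run_var)                        # close to 2.0 and 1.0
print(batch_norm_test(4.0, run_mu, run_var))  # about 2.0
```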

Multi-class classification

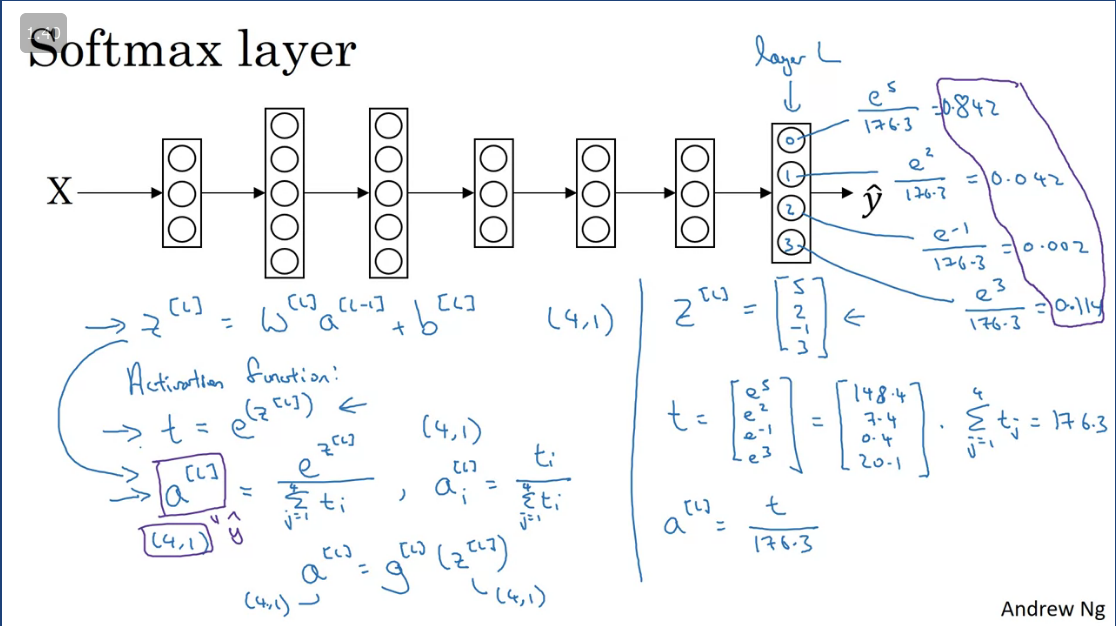

Softmax Regression

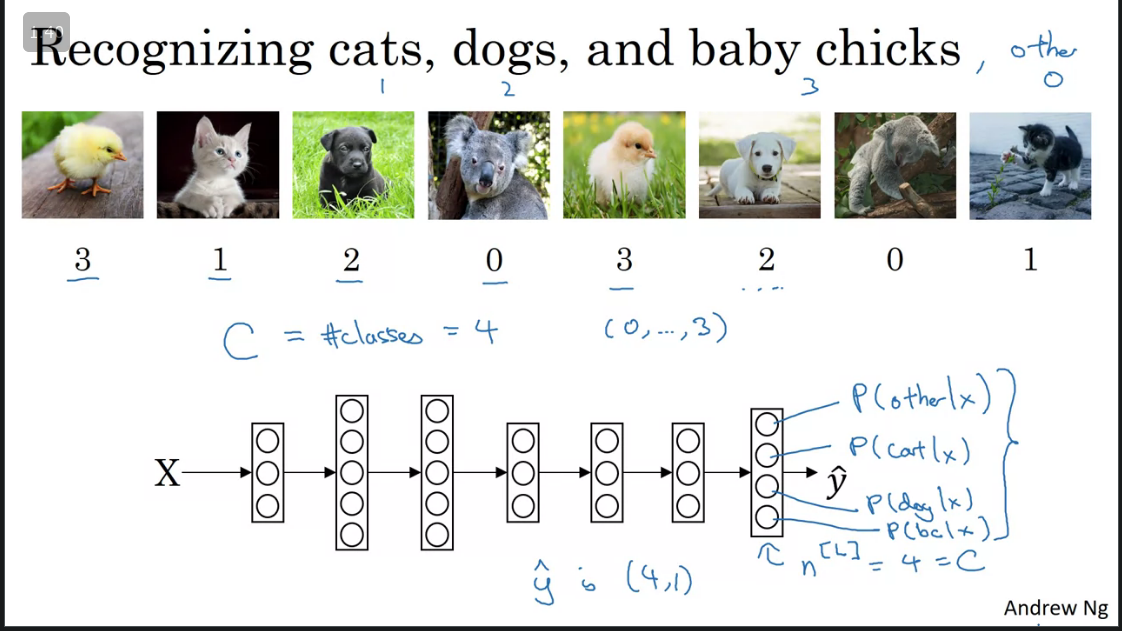

Previously we saw binary classification examples, where there are only 2 possible outcomes (1/0). But what if there are multiple possible classes?

Enter softmax regression. According to developers.google.com, "A softmax is a function that provides probabilities for each possible class in a multi-class classification model. The probabilities add up to exactly 1.0. For example, softmax might determine that the probability of a particular image being a dog at 0.9, a cat at 0.08, and a horse at 0.02. (Also called full softmax.)"

Suppose that instead of just recognizing cats, you want to recognize cats, dogs, and baby chicks. Let's call cats class 1, dogs class 2, and baby chicks class 3. If none of the above applies, there's an "other" or "none of the above" class, which we'll call class 0.

Let $C$ be the number of classes (here $C = 4$). The number of units in the output layer $L$, $n^{[L]}$, equals $C = 4$, one unit per class. The first output unit (class 0) should output the probability of the "other" class given the input $x$, $P(\text{other} \mid x)$.

- The second unit, given the input $x$, outputs the probability that it's a cat, $P(\text{cat} \mid x)$.

- The third unit outputs the probability that it's a dog, $P(\text{dog} \mid x)$.

- The fourth unit outputs the probability that it's a baby chick, $P(\text{baby chick} \mid x)$.

Therefore the output $\hat{y}$ is a $(4, 1)$ vector: it outputs 4 probabilities, one per class, and they must sum to 1.

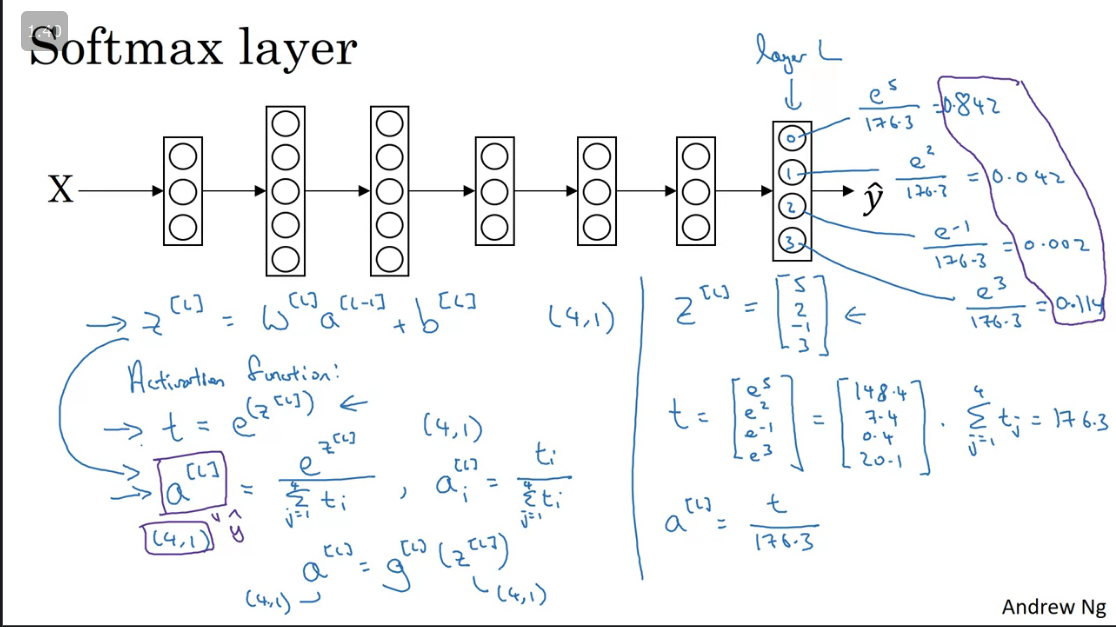

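The standard way to get the network to do this is a softmax layer (softmax activation) as the output layer.

The softmax activation takes the vector $z^{[L]} = W^{[L]} a^{[L-1]} + b^{[L]}$ and maps it to 4 probabilities that sum to 1: compute $t = e^{z^{[L]}}$ element-wise, then $a^{[L]}_i = \frac{t_i}{\sum_{j=1}^{4} t_j}$. In summary, a softmax activation layer can be written as $a^{[L]} = g^{[L]}(z^{[L]})$, and both $z^{[L]}$ and $a^{[L]}$ have shape $(4, 1)$.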
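The softmax formula above as a short Python function, applied to an example $z^{[L]}$ for the 4 classes. Subtracting the maximum before exponentiating is a common numerical-stability addition not mentioned in these notes; it cancels out in the ratio, so the result is the same softmax. The function name and input values are illustrative.

```python
import math

def softmax(z):
    """Softmax activation: t = exp(z) element-wise, then a = t / sum(t)."""
    z_max = max(z)                        # subtract the max for numerical stability;
    t = [math.exp(v - z_max) for v in z]  # it cancels out in the ratio below
    total = sum(t)
    return [v / total for v in t]

# Example z^[L] for classes 0 = other, 1 = cat, 2 = dog, 3 = baby chick.
z_L = [5.0, 2.0, -1.0, 3.0]
a_L = softmax(z_L)
print([round(p, 3) for p in a_L])  # [0.842, 0.042, 0.002, 0.114]
print(sum(a_L))                    # 1 up to floating-point rounding
```

The predicted class is the one with the largest probability, here class 0 ("other").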

Training a softmax classifier

Q & A

- If searching among a large number of hyperparameters, you should try values in a grid rather than random values, so that you can carry out the search more systematically and not rely on chance. True or False?

- False

- Every hyperparameter, if set poorly, can have a huge negative impact on training, and so all hyperparameters are about equally important to tune well. True or False?

- False. We've seen in lecture that some hyperparameters, such as the learning rate, are more critical than others.

- During hyperparameter search, whether you try to babysit one model (“Panda” strategy) or train a lot of models in parallel (“Caviar”) is largely determined by:

- The amount of computational power you can access

- If you think $\beta$ (the hyperparameter for momentum) is between 0.9 and 0.99, which of the following is the recommended way to sample a value for $\beta$?

- `r = np.random.rand()` followed by `beta = 1 - 10 ** (-r - 1)` (with `import numpy as np`). Since $r \in [0, 1)$, this gives $1 - \beta \in (0.01, 0.1]$, i.e. $\beta \in [0.9, 0.99)$ sampled on a log scale.

- Finding good hyperparameter values is very time-consuming. So typically you should do it once at the start of the project, and try to find very good hyperparameters so that you don’t ever have to revisit tuning them again. True or false?

- False

- In batch normalization as presented in the videos, if you apply it on the $l$th layer of your neural network, what are you normalizing?

- $z^{[l]}$

- In the normalization formula why do we use epsilon?

- To avoid division by zero

- Which of the following statements about $\gamma$ and $\beta$ in Batch Norm are true?

- They can be learned using Adam, Gradient descent with momentum, or RMSprop, not just with gradient descent.

- They set the mean and variance of the linear variable of a given layer.

- After training a neural network with Batch Norm, at test time, to evaluate the neural network on a new example you should:

- Perform the needed normalizations, using $\mu$ and $\sigma^2$ estimated with an exponentially weighted average across the mini-batches seen during training.

- Which of these statements about deep learning programming frameworks are true? (Check all that apply)

- A programming framework allows you to code up deep learning algorithms with typically fewer lines of code than a lower-level language such as Python.

- Even if a project is currently open source, good governance of the project helps ensure that it remains open even in the long term, rather than becoming closed or modified to benefit only one company.